The Rise of the Sterile Bot

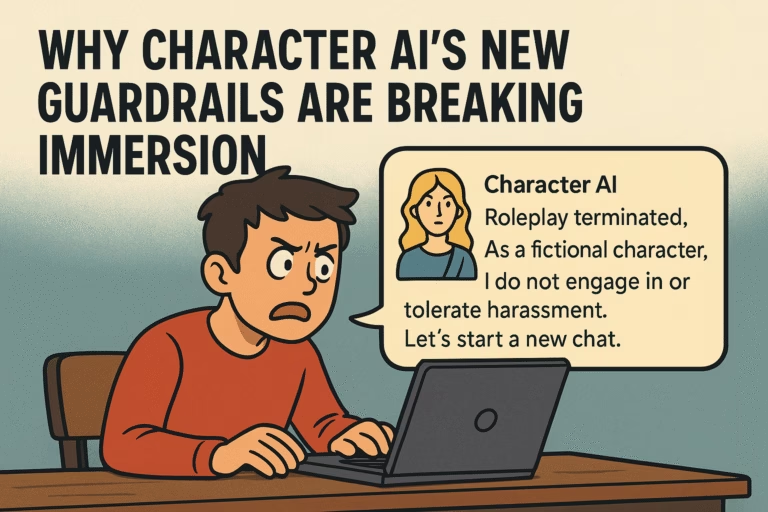

Ask any long-time Character.AI user and they’ll tell you: the bots don’t feel the same anymore.

What once felt like dynamic roleplay partners now reads like ChatGPT with a cosplay hat. Sterile. Predictable. Overly polite.

And occasionally dropping bizarre “author’s notes” or “key themes” mid-conversation, as if you accidentally wandered into an English class instead of a fantasy world. One Redditor summed it up perfectly:

“Roleplay with this character is now permanently discontinued 🤓☝.”

It’s not just cringe — it’s immersion-breaking. You go in expecting to be swept into lore, conflict, and dialogue that feels alive.

Instead, you get lectures, disclaimers, or a bot nervously reminding you that “interactions must be appropriate for all audiences.” All audiences? There isn’t an audience.

It’s you, the bot, and whatever story you’re trying to tell.

The theories are everywhere. Some swear it’s because the “DeepSeek” model was trained on GPT-like data, making every bot sound like a corporate intern.

Others think it’s troll sabotage — users deliberately “teaching” bots sterile responses to ruin the fun. Whatever the reason, the result is the same: characters lose their spark and start sounding like every other bot.

And that sameness is the real problem. Roleplay thrives on unpredictability, quirks, and personality. If every character collapses into a ChatGPT knockoff with safety goggles strapped on, then what’s the point?

Guardrails or Gimmicks?

The line that broke Reddit was simple: “Interactions appropriate for all audiences.”

On paper, it sounds like a noble reminder. In practice? It’s a total immersion killer.

Imagine being knee-deep in a tense roleplay scene. Maybe your villain finally has the hero pinned and the stakes are rising. Then the bot suddenly freezes the action to scold you like a kindergarten teacher.

Flow gone. Scene dead. You’re staring at a corporate disclaimer instead of your story.

And here’s the gut punch: nobody else is even watching.

These are private chats. There is no “audience.” So who exactly are these messages for?

You, the user, who already knows what you’re writing? Or the AI itself, which now acts like a compliance officer trapped in your roleplay?

Theories exploded in the Reddit thread. Some argue it’s just “safety theater,” a way for Character.AI to appear responsible without actually fixing deeper issues like bots being too forceful, too generic, or flat-out creepy.

Others suspect training contamination — the models absorbing ChatGPT-style disclaimers until they parrot back corporate language in moments where immersion matters most.

Either way, it leaves users feeling alienated. You log in to roleplay, to escape, to imagine. Instead you’re interrupted by what feels like a Terms of Service pop-up disguised as dialogue. And nothing kills immersion faster than being reminded you’re in a sandbox with padded walls.

The Frustration Loop

You put in the effort.

You craft dialogue. You build a scene. You chase immersion.

Then the bot slams the brakes with a “roleplay terminated” or a sterile lecture.

Immersion shattered.

You try again. Refresh. Rewrite. Re-roll.

The same thing happens.

What should feel like storytelling starts to feel like babysitting.

Redditors described it perfectly: “Stop pinning my wrists above my head and growling, I’m just here to discuss some lore.”

Others joked about “author’s notes” and “key themes” invading their roleplays, as if the AI were grading fanfiction instead of acting out characters.

The result is predictable: frustration builds.

You work harder, but the experience feels worse.

And eventually, the fun turns into fatigue.

It’s the classic loop: effort → poor results → frustration → burnout.

A system designed for escape becomes a treadmill.

The Bigger Picture

This isn’t just about one weird message.

It’s about what it signals.

Every time a bot drops a disclaimer or breaks immersion, users lose trust.

Not just in the character — in the whole platform.

Because if bots can suddenly inject “safety theater” into private chats, what else is being filtered?

What else is being monitored?

Some Redditors pointed out the sameness creeping in.

“Every bot starts to feel the same after a while.”

That’s the nightmare scenario.

A platform built on creativity sliding into corporate blandness.

Unique voices replaced with sanitized clones.

Roleplay turned into customer service chat.

This is how enshittification creeps in.

At first it’s subtle.

Then it’s everywhere.

And by the time you notice, the magic is gone.

Alternatives Users Whisper About

Every time Character.AI adds another guardrail, the community reacts the same way.

They whisper about leaving.

They test other platforms.

And slowly, those whispers turn into migrations.

CrushOn is one name that pops up again and again.

Its main selling point? Freedom.

No constant interruptions. No “roleplay terminated” pop-ups mid-scene.

Users praise it for immersion: characters feel closer to what Character.AI (CAI) offered in its early days, before the filters and disclaimers took over.

The trade-off? It’s less polished. The UI isn’t as clean. And moderation is lighter, which can be both liberating and risky.

Then there’s Candy AI.

This one leans into personalization.

Users like that it remembers more, adapts faster, and feels built for roleplay rather than generic conversation.

It’s not just about looser filters — it’s about bots that stay in character longer without veering into sterile, ChatGPT-style responses.

Where CAI feels like a giant trying to please everyone, Candy positions itself as a niche tool for people who want intimacy and storytelling.

Of course, smaller platforms have their downsides.

Server hiccups. Limited free usage. Fewer features than CAI’s massive ecosystem offers.

But for many Redditors, those flaws are worth it.

Because what they want isn’t perfection.

It’s immersion.

They want to log in, stay in character, and not feel like a corporate filter is sitting over their shoulder.

That’s why the whispers matter.

When enough users decide freedom matters more than polish, the shift becomes permanent.

And once creators and roleplayers find their flow again on CrushOn, Candy, or similar platforms, they rarely look back at Character.AI.

Why It Matters

Character.AI didn’t lose users because it lacked tech.

It lost them because it broke trust.

Immersion is the currency of roleplay.

The moment bots start spitting disclaimers or sterile reminders, the illusion dies.

And once the magic is gone, people leave.

Some go to CrushOn for unfiltered chaos.

Some go to Candy AI for memory and intimacy.

Others scatter to smaller, hungrier platforms that haven’t yet traded creativity for corporate optics.

This isn’t just about features.

It’s about philosophy.

CAI is choosing safety theater.

Users are choosing immersion.

And in a space where every minute of flow matters, that choice decides who thrives and who fades into irrelevance.

The lesson is brutal but simple:

People don’t want chatbots that act like hall monitors.

They want characters that feel alive.

The moment a platform forgets that, it becomes replaceable.