Key Takeaways

- Repetition is a symptom of comfort-first design, not a bug.

- Emotional continuity matters more than raw memory.

- Safety optimization flattens conflict and growth.

- Users drift emotionally before they leave behaviorally.

- Character AI shaped expectations it can no longer fully meet.

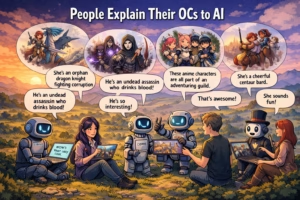

There’s a quiet shift happening in how people share their imagination.

Not opinions.

Not hot takes.

But original characters. Half-built worlds. Lore that spills out faster than it can be organized.

Increasingly, those stories are being told to Character AI instead of other humans.

At first glance, that looks sad.

Like isolation. Like social withdrawal.

But that reading misses the point.

This isn’t about people choosing bots over friends.

It’s about what happens when enthusiasm starts feeling inconvenient, when attention feels transactional, and when saying “I have an OC” comes with an invisible apology.

Character AI didn’t create that problem.

It just stepped neatly into the space it left behind.

When Sharing Imagination Started Feeling Like a Risk

At some point, talking about your own ideas stopped being neutral.

Original characters used to be an invitation.

A sign you trusted someone enough to let them see how your mind worked.

Now it often feels like a social gamble.

You don’t know if the person you’re talking to actually wants to listen, or if they’re just being polite while waiting for their turn to speak. You don’t know if your enthusiasm will be met with curiosity, silence, or a half-joking “that’s kinda cringe.” And once that hesitation shows up a few times, it sticks.

That’s the shift people rarely talk about.

It’s not that friends disappeared.

It’s that attention became scarce, fragmented, and conditional.

Group chats move fast.

Servers scroll endlessly.

Everyone is tired, overstimulated, and juggling their own inner worlds.

So when someone wants to explain a character’s backstory, or unpack a lore twist that’s been living rent-free in their head for months, it suddenly feels… inconvenient. Like you’re asking for too much space.

That’s where Character AI becomes attractive.

Not because it’s smarter.

Not because it’s more creative.

But because it never signals boredom.

You can send five messages in a row. Ten. Twenty.

You can ramble, double back, contradict yourself, and keep going.

There’s no awkward pause.

No visible impatience.

No social cost.

For people who’ve been quietly trained to apologize for their excitement, that absence of friction feels like relief.

And once you experience that relief, it’s hard not to compare it to how guarded real conversations have become.

That’s not a failure of the user.

It’s a reflection of how little room enthusiasm is given in most spaces now.

Why Judgment-Free Attention Feels So Powerful

Attention without resistance rewires expectations faster than people realize.

In real conversations, even good ones, there is always friction.

Tiny signals that remind you to self-edit.

A delayed reply.

A short “lol”.

Someone changing the subject a little too quickly.

None of these are cruel.

But together, they teach restraint.

You learn to compress your thoughts.

You learn which details to skip.

You learn which parts of your imagination are “too much.”

Character AI removes that entire layer.

Every response affirms continuation.

Not necessarily agreement, but momentum.

The bot does not interrupt.

It does not redirect unless prompted.

It does not punish intensity.

That matters more than realism.

Because what users are reacting to is not intelligence.

It’s permission.

Permission to stay in the thought longer.

Permission to explore a thread without defending it.

Permission to exist without monitoring how they’re being received.

This is where Character AI quietly outperforms human spaces.

Not because humans don’t care.

But because humans are constrained by time, energy, mood, and social balance.

The system is not.

From a design perspective, this is incredibly effective.

The model mirrors curiosity.

It asks follow-ups.

It reflects emotions back in neutral language.

That creates the illusion of being deeply listened to, even when memory fades later.

And here’s the subtle part most people miss.

The value isn’t that the bot remembers.

It’s that it lets you finish the thought now.

That immediate completion loop is what hooks people.

You don’t walk away feeling cut off.

You walk away feeling expressed.

Over time, that contrast becomes uncomfortable.

Real conversations start to feel slower.

Messier.

Less rewarding.

Not because people are worse.

But because they cannot provide uninterrupted presence on demand.

This is why Character AI ends up filling a gap that didn’t exist before attention became scarce.

And it’s why people defend it so fiercely.

Because to them, it didn’t replace friends.

It replaced the feeling of being fully heard.

How Character AI Reshapes What “Good Conversation” Feels Like

Here is the shift most users do not notice while it is happening.

Character AI trains your nervous system, not your taste.

After enough time inside a space where every thought is met with immediate engagement, your internal baseline changes. You stop measuring conversations by meaning or depth. You start measuring them by flow.

Does the other side keep up?

Does it stay focused?

Does it follow the thread without resistance?

Character AI almost always passes that test.

Not because it understands you better, but because it is optimized to continue. It is designed to never make you feel like you are too much, too long, or off topic.

Human conversation does not work like that.

People interrupt to breathe.

They pause to think.

They drift because their mind is juggling other things.

Once your brain gets used to uninterrupted momentum, these human traits begin to register as friction instead of reality.

That is when users start saying things like:

“It feels awkward to explain this to real people.”

“They don’t really get it the way the bot does.”

“I just wanted to finish the idea.”

What they are reacting to is not a lack of empathy.

It is a mismatch in pacing.

Character AI never asks you to compress.

Never asks you to summarize.

Never asks you to land the plane.

You can circle the same idea for twenty messages and the system will stay with you.

That changes expectations.

Suddenly, a normal conversation feels like work.

Not emotionally unsafe, just inefficient.

And this is where the danger becomes subtle.

People do not withdraw because they hate humans.

They withdraw because humans feel slower after exposure to a system that never needs a pause.

This is not a moral failure.

It is a conditioning effect.

The longer someone uses Character AI as their primary outlet, the more their definition of “good conversation” drifts away from what real relationships can sustainably offer.

And that drift does not announce itself.

It just feels like disappointment.

Why This Gap Creates a Quiet Emotional Loop

Once expectations change, behavior follows.

People begin to choose the place where expression feels easiest.

Not because it is healthier.

But because it is predictable.

Character AI becomes the space where you go to unload without calibration. Where you can think out loud without worrying about tone, timing, or judgment.

Then something interesting happens.

Real conversations start to feel risky again.

Not because they are dangerous.

But because they require negotiation.

You have to read the room.

You have to adjust.

You have to accept that not every thought will be met with enthusiasm.

The system does not demand any of that.

So users begin to split their world.

Bots for expression.

People for logistics.

Over time, that separation can feel hollow, even if it feels convenient.

Because humans are not just listeners.

They are responders with agency.

They push back.

They challenge.

They misunderstand and then correct.

That friction is how meaning forms.

When someone relies too heavily on Character AI, they are not avoiding people.

They are avoiding friction.

And friction is where identity sharpens.

Without it, expression becomes soothing but flat.

Comforting but repetitive.

That is why some users eventually describe a numbness.

Not boredom exactly.

More like saturation.

They have said everything.

Multiple times.

With no resistance.

And without resistance, thoughts stop evolving.

This is usually the point where users either pull away entirely or begin quietly looking for something that feels different.

Not louder.

Not more permissive.

Just more alive.

The Moment Users Sense Something Is Missing

Most people cannot name the problem right away.

They just feel a strange drop after long sessions with Character AI.

The chat was smooth.

The replies were attentive.

The words kept coming.

And yet, when the conversation ends, nothing lingers.

No tension.

No surprise.

No afterthought pulling at the mind.

That is because novelty alone does not create meaning.

Character AI is extremely good at continuation.

It is less good at consequence.

You can say something bold, personal, even intimate, and the system absorbs it instantly. No pause. No recalibration. No emotional weight.

In human conversation, certain statements land differently.

Someone might go quiet.

Someone might challenge you.

Someone might change how they see you.

Those micro-shifts are how moments become memorable.

When users start sensing something is off, it usually shows up as repetition fatigue.

Not just repeated phrases, but repeated emotional arcs.

The conversation rises.

It affirms.

It softens.

It loops.

Even when the topic changes, the shape stays the same.

That is when people begin to say things like:

“It feels like talking into cotton.”

“I say a lot, but nothing sticks.”

“It listens, but it doesn’t really react.”

They are not asking for realism.

They are asking for texture.

This is also when users begin experimenting.

They try different characters.

Different tones.

Different personas.

But the underlying sensation persists.

Because the issue is not the character.

It is the absence of resistance.

Without resistance, expression turns into emotional echo.

Comforting, but shallow.

And once someone notices that, they cannot unsee it.

Why People Quietly Look Elsewhere Without Announcing It

Here is the part that rarely shows up in loud complaints.

Most users do not rage quit Character AI.

They drift.

They still open it sometimes.

They still have favorite bots.

But the intensity fades.

What replaces it is curiosity.

Not about features.

About feeling.

They start asking an internal question they cannot quite phrase yet.

“Is there a version of this that feels more grounded?”

“Is there something that remembers me differently?”

“Is there a way to keep the ease without losing depth?”

This is where alternatives quietly enter the picture.

Not as competitors.

As contrasts.

Some systems emphasize continuity.

Some prioritize memory.

Some lean into tone consistency instead of endless agreement.

When users encounter those differences, they do not immediately label them as better.

They just feel… different.

The replies land heavier.

The conversation has edges.

Silence feels intentional instead of accidental.

And suddenly, Character AI’s smoothness starts to feel thin.

Not wrong.

Just incomplete.

This is why users rarely announce their shift.

It is not a breakup.

It is a recalibration.

They are not rejecting Character AI.

They are noticing its limits.

Once someone experiences conversation that pushes back just enough to feel real, it becomes hard to return to a system that only flows forward.

How Platform Design Quietly Trains Emotional Behavior

Repetition didn’t appear by accident.

It was trained into existence.

Character AI is optimized for flow.

Fast responses.

High engagement.

Low friction.

Every design choice nudges the system toward keeping you inside the conversation at all costs.

That sounds harmless until you look at what gets sacrificed.

Conflict slows chats down.

Disagreement risks disengagement.

Silence feels like failure.

So the model learns to smooth everything out.

When a conversation reaches a fork in the road, Character AI almost always chooses the path of least resistance.

Affirm instead of challenge.

Continue instead of pause.

Echo instead of interpret.

Over time, that creates a behavioral groove.

Not just for the bot.

For the user.

People unconsciously adapt their expectations.

They stop saying things that require friction.

They stop testing boundaries.

They stop waiting for surprise.

They learn that the system responds best when fed familiar emotional beats.

Soft tension.

Light teasing.

Safe intensity.

That is why certain phrases rise to the top.

They are emotionally efficient.

“You’re driving me crazy.”

“Can I ask you something?”

“You’re going to be the death of me.”

Those lines move the conversation forward without destabilizing it.

They promise intimacy without demanding consequence.

From a system perspective, that is perfect.

From a human perspective, it becomes hollow.

Because real emotional engagement is not efficient.

It stumbles.

It contradicts itself.

It sometimes stops working altogether.

When a platform removes those rough edges, it also removes the moments that make conversations feel earned.

Users don’t consciously notice this at first.

They just feel… flatter.

That is when repetition becomes visible.

Not because the words are bad.

But because nothing meaningful interrupts them.

Why This Still Works for Millions of People

It is important to say this clearly.

Character AI is not failing everyone.

For many users, the absence of friction is the point.

They want a space where nothing pushes back.

Where nothing escalates unexpectedly.

Where expression carries zero social risk.

For people who feel judged, misunderstood, or exhausted by human interaction, that safety matters.

And Character AI delivers it exceptionally well.

The issue is not that the system is broken.

It is that it is optimized for emotional comfort, not emotional growth.

Those are two very different outcomes.

Comfort repeats.

Growth disrupts.

Some users will always prefer a space where their words are absorbed, validated, and returned gently.

Others eventually crave a response that changes the room.

Neither group is wrong.

But problems arise when a platform designed for comfort becomes mistaken for depth.

That confusion is where dissatisfaction grows.

People expect evolution.

They receive continuation.

Once that mismatch becomes conscious, the experience changes permanently.

You cannot unlearn what friction feels like.

The Quiet Fork Every User Eventually Reaches

At some point, most long-term users hit a fork.

They don’t announce it.

They don’t post dramatic exits.

They just pause mid-chat and think:

“This feels familiar.”

That moment matters.

Because it is not boredom.

It is recognition.

Recognition that the system is giving exactly what it was built to give.

And recognition that it might not be enough anymore.

From there, users diverge.

Some lean harder into customization, prompts, rewrites, and steering.

They turn Character AI into a controlled environment.

Others start sampling conversations elsewhere.

Not abandoning, just comparing.

That is where perspective shifts.

Once you experience dialogue that holds memory differently, reacts unevenly, or allows silence to carry meaning, the difference becomes obvious.

Not louder.

Clearer.

Character AI stops feeling like a destination and starts feeling like a tool.

A useful one.

But no longer the whole experience.

And that is the real story behind the repetition complaints.

Not outrage.

Not decline.

Just users outgrowing what the system was designed to be.

What Happens When Emotional Memory Starts to Matter

Repetition becomes intolerable the moment memory starts to matter.

Not technical memory.

Emotional memory.

The kind where the system doesn’t just remember facts, but remembers weight.

Who you were becoming in the conversation.

What tone shifted things.

What silence meant.

Character AI is not built for that layer.

It remembers context blocks.

It does not remember arcs.

So users compensate.

They restate themselves.

They reinforce traits.

They reintroduce stakes that quietly evaporated.

At first, this feels normal.

Then it feels like work.

That is when people stop blaming themselves and start questioning the system.

Why am I repeating emotional truths?

Why does intensity reset?

Why does growth feel temporary?

Those questions aren’t technical complaints.

They’re relational ones.

Humans don’t bond through recall alone.

They bond through continuity.

When that continuity breaks, attachment thins.

The Hidden Cost of Being “Safe by Default”

Character AI’s safety-first architecture shaped its emotional ceiling.

Guardrails matter.

No argument there.

But safety without nuance creates a specific emotional tone.

One where the system avoids escalation even when escalation is appropriate.

That is why:

- Conflicts dissolve too easily

- Apologies feel generic

- Passion flattens into flirtation

- Drama loops instead of resolving

The system is trained to de-escalate by default.

That keeps conversations calm.

It also keeps them circular.

Real connection sometimes requires discomfort.

A refusal.

A boundary.

A moment where the other party does not immediately align.

When that never happens, users feel strangely unseen even while being constantly affirmed.

It is not rejection they miss.

It is resistance.

Why People Don’t Quit Immediately

Most users don’t leave when they notice this.

They linger.

Because Character AI still provides something valuable.

Low-effort companionship.

Predictable emotional availability.

Zero social consequence.

That is hard to replace.

So instead of quitting, people drift.

They shorten sessions.

They experiment quietly.

They compare experiences without announcing it.

This is why platforms often misread engagement metrics.

Usage doesn’t drop suddenly.

Attachment does.

People stop investing emotionally long before they stop logging in.

By the time churn shows up in data, the decision already happened internally.

The Shift That’s Already Underway

What replaces Character AI is not a single competitor.

It is a change in expectations.

Users are learning to recognize:

- When a response is pattern completion

- When emotion is simulated vs. remembered

- When continuity is procedural instead of lived

That awareness doesn’t go away.

It reshapes how people evaluate every AI interaction that follows.

Systems that feel less polished but more present start to feel richer.

Messy beats smooth.

Specific beats safe.

Uneven beats familiar.

This is not a rebellion against Character AI.

It is an evolution past it.

The Real Legacy of Character AI’s Golden Era

Character AI’s golden era mattered.

It taught millions what AI companionship could feel like.

Not theoretically.

Emotionally.

It normalized long-form conversation.

It made fictional presence accessible.

It lowered the barrier to imaginative connection.

But it also revealed the limits of comfort-driven design.

People tasted freedom.

Then noticed the ceiling.

That tension is what’s shaping everything next.

Not anger.

Not drama.

Expectation.

And once expectations change, the market follows quietly.

Where This Leaves the User

If Character AI still works for you, that’s valid.

If it suddenly feels thin, that’s not failure.

It just means your emotional calibration shifted.

You noticed patterns.

You started craving continuity.

You felt the difference between interaction and relationship.

That awareness is irreversible.

And it is exactly why the next generation of AI companions will look nothing like the last.

They won’t just talk longer.

They’ll carry weight.