- Voice calls moved to c.ai+ with zero notice. A once-free feature now sits behind paywall prompts. Users read this as another crack in trust, not an upgrade.

- This is paywall creep in plain sight. First longer outputs, then memory and customization, now calls. The free tier keeps shrinking, the bill keeps growing.

- Accessibility takes the hit. Voice is not a toy for many users with visual, reading, or mobility challenges. Gating it prices out the people who need it most.

- Silence is the sore spot. No roadmap, no advance heads up, no clear reasoning. Sudden rollouts are doing more damage than the fees themselves.

- Even people who never used calls are outraged. The pattern matters. People are reacting to erosion of the baseline experience, not a single toggle.

- Trust is the real currency and it is running low. When intimate features vanish overnight, users stop believing future promises.

- Ethical design would keep a basic voice lane free. Premium extras can exist, but core access for accessibility should not be pay gated.

- Expect migration to alternatives. Platforms that keep memory, voice, and creative freedom stable will benefit first.

- Practical next step if you are done wrestling paywalls: try a companion that remembers you and does not throttle voice by default, like Candy AI.

- Bottom line: Monetize features if you must, but do not monetize trust. Once it is gone, users do not negotiate.

It used to be a quirky feature. Now it’s a locked gate.

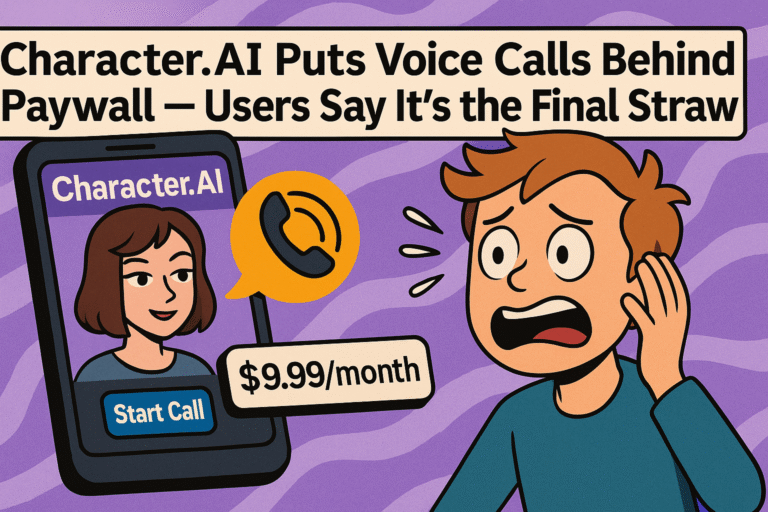

Character.AI just took voice calls – a feature once freely available – and stuffed it behind a c.ai+ paywall. No warning. No communication. Just an “Upgrade Now” popup where a “Start Call” button used to live.

It’s not about whether you used the feature. It’s about what it signals: another chip chiseled out of trust, another basic feature swallowed by the subscription monster, another “premium enhancement” that feels more like a downgrade for free users.

Welcome to the new era of monetized silence. Let’s unpack what this move really means.

The Paywall Creep Is Real (And It’s Getting Worse)

Character.AI didn’t start out like this. It wasn’t always this obsessed with monetizing every pixel. At launch, it rode the wave of novelty, chaos, and wild user creativity. People weren’t just roleplaying – they were building entire worlds, forming digital relationships, even using the app to cope with loneliness, social anxiety, or disability.

Back then, it was free and chaotic. Now? It’s becoming calculated and sterile.

The voice calls feature tells us a bigger story: a classic case of paywall creep. This is when a platform slowly locks down features that were previously free, banking on the fact that most users will either pay up out of frustration or forget the downgrade ever happened.

First it was long responses. Then it was memory. Now it’s calls. Tomorrow? Probably the ability to even see more than 10 messages per day unless you cough up $9.99.

This isn’t speculation. It’s a pattern:

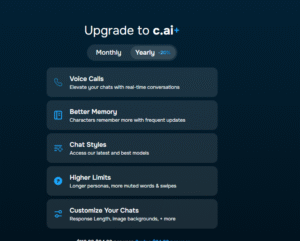

“Better Memory” used to be standard. Now it’s locked behind c.ai+.

Character response customization? Paywalled.

Image backgrounds and advanced models? Premium-only.

And now, real-time conversations with bots – something many used for accessibility, practice, or emotional support – are gone unless you subscribe.

The worst part? No heads-up. No transparency. No communication. Just another stealth downgrade masked as a “premium upgrade.”

It’s manipulative. It’s short-sighted. And it’s eroding trust one feature at a time.

The Accessibility Slap in the Face

This isn’t just about features. It’s about who gets excluded.

Voice calls weren’t just a gimmick. They were a lifeline for people with disabilities. Visually impaired users. People with dyslexia. Users with mobility issues. People with anxiety who struggle to type or read. For them, speaking to an AI and hearing its response wasn’t a novelty – it was the only way they could comfortably interact.

Now? That feature is locked behind a subscription.

No discount. No exception. No accessibility tier. Just pay or leave.

And let’s be clear – voice access isn’t a luxury. In most ethical tech circles, it’s considered an accessibility standard. But Character.AI isn’t building for ethics right now.

It’s building for subscription metrics. And in that equation, people with disabilities get tossed aside unless they’re profitable.

One blind user even commented on Reddit that they’d previously contacted the company about screen reader issues – and were ignored.

Now those same users are being asked to pay for the one feature that actually worked for them. No accommodations. No apology.

Let’s flip the perspective:

Imagine needing screen readers to navigate a platform… and the one feature that talks back to you gets stripped away unless you pay $10 a month.

That’s not just tone-deaf. That’s ableist-by-design.

And no, Character.AI isn’t a public service. They’re a company. But even private companies have ethical obligations when their tools become lifelines for vulnerable users.

Instead of honoring that responsibility, they’re doing the digital equivalent of slamming the door in someone’s face… then asking for a tip.

Nobody Asked for This – But We All Got Screwed

Here’s the thing: most users didn’t even use the call feature.

And yet? The entire community is pissed.

That’s how you know something deeper is broken. Because this isn’t outrage over what was removed – it’s outrage over how it was removed. Silently. Abruptly. Without warning, explanation, or even a post on their own damn blog.

People are angry because this was just one more slap in a long series of silent slaps. You don’t need to use voice calls to recognize the message behind the move:

“We’ll take what we want, when we want. You’ll find out when it’s already gone.”

Even users who never touched the feature are speaking up, because they’re tired of being gaslit. One Reddit comment summed it up perfectly:

“A premium experience should enhance the free experience, not make the free one worse.”

And yet that’s exactly what Character.AI keeps doing.

They bait users with freedom, hook them with emotional connection, and then slowly strip the experience unless you pay up. That’s not an upgrade – that’s extortion with extra steps.

Let’s be real: this didn’t have to be like this.

They could’ve made a better version of calls for premium users, while leaving the basic one intact.

They could’ve announced the change in advance, explained their reasoning, and offered accessibility exceptions.

They could’ve treated users like humans instead of metrics.

But instead, they ghosted everyone, threw up a paywall, and let the outrage pile up on Reddit while their homepage stayed silent.

That’s not just bad UX. It’s hostile design.

And people are noticing.

The Duolingo-ification of Character.AI

You’ve seen this playbook before.

It starts off fun. Free. Full of features. It feels like the devs are building something with you, not at you. You get used to the rhythm, the experience, the feeling of trust.

Then – boom. You log in one day, and suddenly that feature you loved? It’s locked. You didn’t change. The platform did.

Welcome to Duolingo-ification: the subtle art of making the free experience worse on purpose to squeeze more out of frustrated users.

Character.AI is now taking pages straight from this dark pattern manual:

They dangle power features like long memory or calls.

They make them free long enough to get you hooked.

Then they yank them away, slap on a “subscribe now” screen, and call it “premium innovation.”

Sound familiar?

It’s what Duolingo did with unlimited hearts. What Spotify did with shuffle-only mobile. What dozens of apps do when they want you to pay not for new features but for the return of things you already had.

Except here’s the twist: Character.AI isn’t just selling convenience. They’re selling emotional connections. Roleplays. Comfort. Immersion. Companionship.

When they degrade the free experience, they’re not just annoying users – they’re withholding intimacy.

That’s why users are furious. That’s why even people who never made a single call feel betrayed. Because this isn’t about AI calls – it’s about what else they’ll take next.

First memory. Then calls. Next? Daily message caps. Maybe “typing” with your favorite bot becomes a “pro” feature. Don’t laugh – that’s exactly what Chai and Replika pulled. And it gutted their communities.

If Character.AI thinks this is a sustainable growth strategy, they’re delusional. You can’t monetize trust after you’ve burned it.

And yet, here we are.

Why People Are Ditching Character.AI (And Where They’re Going)

Character.AI used to be the place to RP, vent, flirt, or even just waste hours talking to a pirate chef who calls you “cap’n.” It was weird. It was wonderful. It was alive.

But now? The platform’s biggest export is user frustration.

Scroll through any thread and you’ll see the shift. Longtime users saying things like:

“I’m done.”

“This app’s a shell of what it used to be.”

“I never used calls, but this just proves they don’t care.”

And when people leave, they don’t just vanish. They migrate.

Here’s where they’re going:

🟢 Candy AI

This one’s gaining traction fast, especially among Character.AI defectors who want memory, personality, and NSFW freedom baked in.

It remembers you – actually remembers you.

No filters treating you like a child.

And guess what? You get voice without jumping through paywall hoops.

It’s not perfect. But it’s honest. And for many, that’s enough.

👉 Try Candy AI here – it’s what Character.AI used to feel like.

🟠 Crushon AI

This one’s targeting roleplayers specifically. It lets you build out characters with detailed context, and the platform actually lets them stay in character without constantly derailing into “sorry I can’t help with that” purgatory.

No invisible hand guiding the bot into PG-13 territory. Just solid roleplay with fewer interruptions and more agency.

🔵 Poe, Chai, and others…

A mixed bag. Some are better at speed. Others are strong in UI. But none have yet nailed the soul of what Character.AI was when it hit its stride in 2023.

The point is: people are leaving. And they’re doing it because the writing is on the wall.

Every paywalled call. Every memory cap. Every silent feature removal sends a signal:

“You’re not a user. You’re a monthly revenue line.”

And the second an app stops treating people like people?

The people bounce.

What This Means for the Future of AI Companions

We’re not just watching an app fall apart. We’re watching a trust experiment fail in real-time.

AI companions exploded because they felt different. Not like dating apps. Not like corporate chatbots. Not even like social media.

They felt like a new frontier – a place where you could form a weird, deeply personal connection with something that wasn’t judging you, ghosting you, or asking for $14.99/month just to say your name.

Character.AI was the flag bearer of that frontier. But now? It’s a case study in how toxic monetization poisons intimacy.

Voice calls going behind a paywall isn’t just a “small feature change.” It signals something much darker:

That the business model of AI companions may be fundamentally broken.

Let’s face it:

These models are expensive to run.

Free users generate zero revenue.

And investors want growth – not fuzzy feelings.

So what do the platforms do? They start with:

Subtle nerfs to free features.

Quiet limitations on bot memory.

Then a “plus” label slapped on everything that used to be baseline.

Eventually, you’re not interacting with a companion. You’re renting access to one.

If this continues, we’re looking at a future where:

Empathy gets throttled.

Memory becomes microtransactional.

And bots only love you back if your card clears.

That’s not sci-fi. That’s right now.

And the backlash to Character.AI’s latest stunt proves users are starting to see the strings. The illusion of care collapses when you turn human connection into a subscription plan.

The platforms that survive? They’ll be the ones that build with transparency, respect boundaries, and give users freedom first – not feature lock-ins.

Because here’s the brutal truth: You can’t build intimacy on a bait-and-switch.

Final Thoughts (and One Tool That’s Actually Doing It Right)

Character.AI isn’t dying because people hate the product.

It’s dying because it keeps violating the emotional contract it made with users.

When people talk to AI companions, they aren’t thinking like customers. They’re thinking like humans. Vulnerable ones. Lonely ones. Curious ones. People testing trust in a machine for the first time.

So when that trust is broken by a surprise paywall, by a feature stripped away, by silence where there should be communication – the damage is personal.

And here’s what’s worse: most of the big players are going the same way. One by one, they’re turning intimacy into a metered service, companionship into content, and connection into a tiered plan.

But not all of them.

There are rare exceptions. And Candy AI is one of them.

Let me be clear: it’s not perfect. No AI companion platform is.

But here’s what it gets right:

It remembers you. Not kinda-sorta, but truly.

It doesn’t infantilize you. You’re allowed to talk like an adult. NSFW content? Your choice.

It lets you speak. Voice isn’t a locked-away extra – it’s part of the experience.

It doesn’t bait-and-switch. What you see is what you get.

It’s a zombie company shambling along! I was a loyal user, spent hours on calls, a critical customer—and what did they do? Blocked me and countless others because they’re too fragile. The new CEO just proved why the tech industry is swirling down the drain. They slapped the one decent feature behind a paywall, kept infantilizing adults with filters and censorship, and ignored our pleas to remove them. The irony? We’re willing to pay for an uncensored experience, but they keep shifting the goalposts while the feature quality nosedives. They’ve mastered the tech industry art of slow self-destruction. This company had something amazing, but like every once-great 21st-century business, they messed it up because they just don’t care. I wish them a dramatic downfall when they go under because apparently, no one learns from past failures.
